This Is Your Brain On Movies: Neuroscientists Weigh In On The Brain Science of Cinema

In movies, we explore landscapes far removed from our day-to-day lives. Whether experiencing the fantastical adventures of Star Wars or the dramatic throes of The English Patient, movies demand that our brains engage in a complex firing of neurons and cognitive processes. We enter into manipulated worlds where musical scores enhance feeling; where cinematography clues us in to details we’d normally gloss over; where, like omniscient beings, we voyeuristically peek into others’ lives and minds; and where we can travel from Marrakech to Mars without ever leaving our seats. Movies reflect reality, yet are anything but.

“Movies are highly complex, multidimensional stimuli,” said Uri Hasson, a neuroscientist and psychologist at Princeton University. “Some areas of the brain analyze sound bites, some analyze word context, some the sentence content, music, emotional aspect, color or motion.” Just as many people must come together to work on different elements of a movie’s script, score, visuals or costumes, he explained, so many areas of the brain must also be engaged in processing those disparate elements.

The relatively new field of neurocinematic studies seeks to untangle our neurological experience of film and, in doing so, learn not only the mechanisms behind movie watching but also how movies might teach us more about ourselves.

Thanks to new digital technologies and programs such as Final Cut Pro, researchers can easily create experimental films to tease out the science of movie watching. “For a long time, we were stuck with 16 millimeter film and videotapes, which were hard to manipulate and also expensive,” said Dan Levin, a professor of psychology and human development at Vanderbilt University. Just as these new technologies began to emerge, he said, interest in film-related studies started to pick up among cognitive scientists. “All of these forces converged to make studies of the science of cinema more possible,” Levin said.

Scientists first began investigating these ideas by studying viewers’ eye movements and visual processing. Psychologists found, for example, that moviegoers often experience a phenomenon called “change blindness,” the failure to notice discontinuities in visual details. Researchers would cut away and then return to an original shot in which a photo in the background had been altered or one actor had been substituted for another. Yet on the whole, people tended to overlook these changes. “The little breaks in continuities didn’t draw attention, the little changes didn’t alert viewers that something was amiss,” Levin explained.

Viewers, those early researchers reasoned, must not be able to detect continuity errors in movies.

But Levin and his colleagues called this assumption into question, reasoning that people’s difficulty homing in on visual changes might extend beyond the big screen. To test this hypothesis, Levin applied the equivalent of cinematic cuts to the real world. “There are interesting ways you can show that the experience of watching a film is not very different than the experiences encountered in everyday life,” Levin explained.

To do so, he and his colleagues had actors engage random passersby in conversation by asking for directions. Just after the exchange began, two more actors interrupted it by carrying a large wooden door between the pair, which obscured the unwitting test subject’s view. During that brief break, another actor switched places with the original. The two actors wore similar but visibly different clothes.

At the end of the exchange, the experimenters asked the subjects if they had noticed anything unusual about their talk, specifically: “Did you notice that I am not the person you were talking to before the door went by?” Out of 39 participants, almost 40 percent failed to detect the real-world switch of their conversation partner, just as people before them had failed to detect such changes on film. The actors then told the participants that they had unknowingly taken part in an experiment on scene perception, and not to feel bad, since many people miss changes like the one they had just experienced. “This supports the hypothesis that everyday perceptional skills are not so different than the skills we use in watching a film,” Levin said. “We can use that observation to support further studies of the brain science of cinema.”

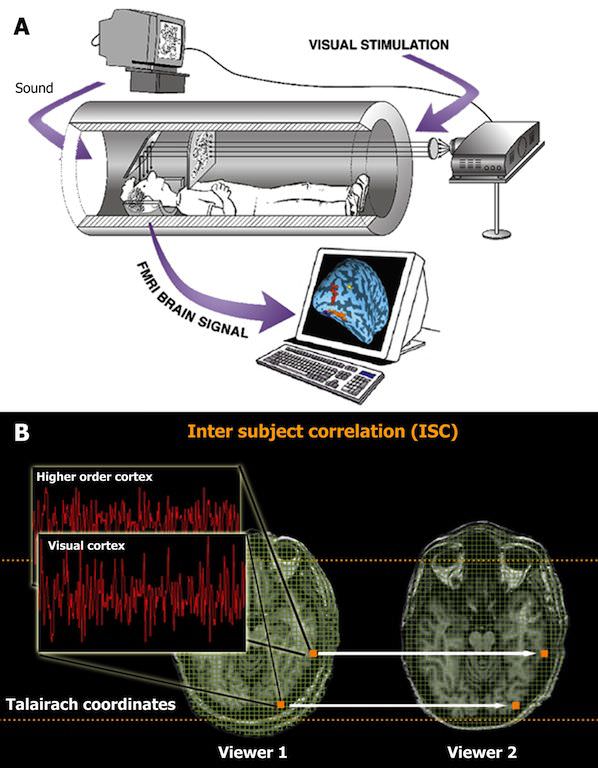

From visual studies, brain studies seemed a natural progression. Previous studies established that viewers tend to look at the same spots on the movie screen, but Hasson and his neuroscience colleagues wanted to dig more deeply beneath the surface to discover how movies unite viewers.

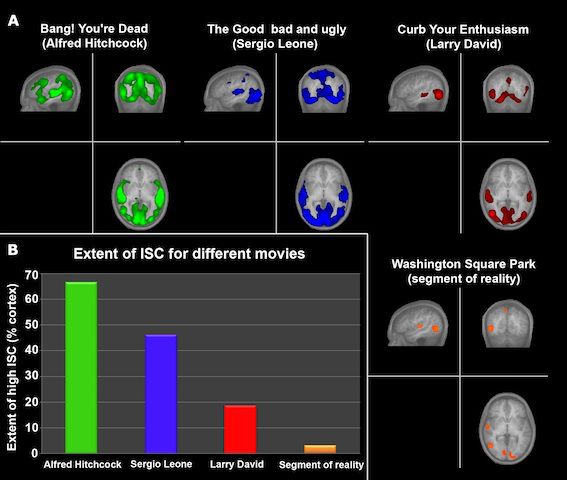

In one study, for example, they used functional magnetic resonance imaging (a procedure that measures brain activity by detecting changes in blood flow) to watch volunteers’ brains as they viewed Sergio Leone’s The Good, the Bad and the Ugly, Alfred Hitchcock’s Bang! You’re Dead and Larry David’s Curb Your Enthusiasm. They also showed participants unedited clips of a random scene filmed in Washington Square Park in New York City, which they thought would most closely reflect a cinematic experience of reality. They then compared all of the viewers’ neurological responses to calculate an inter-subject correlation, or the degree to which brain processes aligned between viewers watching the same film.
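To make the idea of an inter-subject correlation concrete, here is a minimal Python sketch. It is not the researchers’ actual analysis: it assumes each viewer’s response in a brain region is a simple list of numbers over time, scores a region by the average Pearson correlation between every pair of viewers, and counts a region as “shared” when that average passes a hypothetical cutoff of 0.3. The function names and the toy numbers are invented for illustration.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length time courses."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0  # a flat signal carries no shared timing information
    return cov / sqrt(var_x * var_y)


def inter_subject_correlation(time_courses):
    """Mean pairwise correlation across viewers for one brain region."""
    pairs = list(combinations(time_courses, 2))
    return sum(pearson(a, b) for a, b in pairs) / len(pairs)


def fraction_shared(regions, threshold=0.3):
    """Fraction of regions whose score exceeds the (hypothetical) cutoff."""
    shared = sum(
        1 for viewers in regions
        if inter_subject_correlation(viewers) > threshold
    )
    return shared / len(regions)


# Toy data, not study data: two regions, three viewers each.
region_a = [[1, 2, 3, 4, 5], [2, 4, 6, 8, 10], [1, 3, 5, 7, 9]]  # viewers rise together
region_b = [[1, 2, 3, 4, 5], [5, 4, 3, 2, 1], [1, 5, 2, 4, 3]]  # viewers diverge

print(inter_subject_correlation(region_a))
print(inter_subject_correlation(region_b))
print(fraction_shared([region_a, region_b]))
```

In this toy case, the viewers’ responses line up in the first region and pull apart in the second, so only one of the two regions counts as shared. A figure such as “65 percent of the cortex” can be read in the same spirit, as the share of the brain in which viewers’ responses rose and fell together.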

Not all movies elicited the same neural responses, they found. The highly structured Hitchcock film evoked similar responses across viewers in nearly 65 percent of the cortex (the outer layer of the brain, which plays a role in memory, attention, awareness, thought, language and consciousness), for example, while the segments of reality taken from the park did so in only about 5 percent. The Good, the Bad and the Ugly came next after Hitchcock, at 45 percent, while Curb Your Enthusiasm fell to 18 percent.

In other words, highly controlled films exert more control over viewers’ minds than unstructured clips that are closer to our day-to-day experience of reality. When Hitchcock directs our gaze to a bloody knife or an open door, we all look together. In real life, however, our attention wanders at will to the guy with the newspaper, the woman wrestling with her shopping bags or the wind in the trees, with little rhyme or reason.

“Right now, you may be looking around your office, out the window, at the computer screen or at your coffee cup on the desk,” Hasson said. “In cinema, you don’t have this. The director will cut to close-ups of what he wants you to look at, put other things out of focus and give you clues to guide your eye movements.”

Most of Hollywood prefers this highly controlled style of filmmaking, while something like the experimental, random films of Andy Warhol falls at the opposite end of the spectrum and is more akin to reality than to conventional movies. No two movies will generate the same brain response, but individual viewers will largely share a similar neurological experience when viewing the same film, especially if it follows the industry’s conventional stylistic standards. “Think about movies as highly controlled experiments in which filmmakers control what you see, hear, expect, feel, predict and so forth,” Hasson said. “I think the most interesting thing these findings show us is how similar our brains are.”

These similarities run deep, he explained, applying across different cultures and languages, and may form the basis of our global communication and interactions. In other words: the common movie-viewing experience may help explain the attributes that unite us as humans.

The field of neurocinematic studies is still in its infancy, but the researchers can see it branching in two directions. First, scientists can use movies to understand behavior and the brain, as Hasson and Levin are doing. Second, researchers could flip that association around and use the brain to understand movies. Imagine a future, for example, in which viewers are shown a pre-release rough cut while the director peeks into their minds to see if his work is drawing out the desired response. “One might be able to tell a filmmaker, ‘OK, people got lost in their visual cortex in minute 55,’” Hasson imagined, “or that minute 37 evoked variable reactions to the dialogue.”

Of course, most film theory experts do not have neuroscience backgrounds, so this possibility has yet to be explored—but it is a possibility. The only question is whether or not directors would want to lift the lid on this neurological Pandora’s box. “Sometimes, artists feel that they don’t want these scientific tools as part of their artistic evaluations, and that’s fine,” Hasson said. “I’m concerned about basic brain function, not film theory.”